How to prepare for difficult conversations

by doing them over and over again

The most useful training I have ever done was on techniques for handling difficult conversations: giving bad news, or simply dealing with people in an emotional state.

There was a lot of theory, obviously, but the highlight of the day was the session with a professional actor who would play any part we wanted so we could practice our newly learned skills.

That day I saw grown-ups lose control and yell at the actress. They knew it was just a training session, but the situation felt so real that they couldn’t control themselves. A testament to how good that actress was.

It was so powerful that, a few years later, I put my people through something similar to prepare them for the end-of-the-year review cycle.

I suggest you try to organize something like this; it’s not difficult to find someone.

Yet one problem remains

You can’t keep an actor on call for whenever you want to rehearse a difficult conversation on the spot. Practicing with your manager or colleagues can be useful, but only if they know how to act convincingly.

Wouldn’t it be great if you could train whenever you want?

The difficult conversations simulator is here!

Since I am currently unemployed, I started working on some demos and ideas just to test the waters of what’s possible with today’s AI tools, and what you can achieve in a short time is truly impressive.

So I built a simulator where you can rehearse your upcoming difficult conversations with a GPT-powered chatbot.

You can find the simulator on my HuggingFace personal space: https://huggingface.co/spaces/mangiucugna/difficult-conversations-bot

How to use it

The interface is quite simple: on the left side you’ll find the chat box, where you type your messages and chat with your character.

What is more interesting is the kind of output that you get while talking with your character. Let’s look at it:

The bot’s reply in the main chat box has two parts: the non-verbal cues, in italics, and the verbal response.

Funnily enough, the non-verbal cues weren’t entirely my idea: GPT kept ignoring my instructions and sprinkling those cues randomly through its replies, so I gave them a dedicated part of the response.

At the bottom of the left side you will find another interesting window:

This window shows the internal monologue of both GPT and the character, and it’s such an important part of this simulator!

Not only is it useful for telling when GPT is drunk, it’s also the kind of feedback a real actor would give you at the end of a session. You can use this internal monologue to refine your technique and find a better way to deliver your message.
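To make the split between chat text and monologue concrete, here is a minimal sketch of how one structured reply could be rendered, assuming the model returns a JSON object with three hypothetical keys (`non_verbal`, `response`, `internal_monologue`). The simulator’s real field names and rendering code may differ; the public Space is the place to check.

```python
import json


def split_reply(raw_reply: str) -> tuple[str, str]:
    """Split one JSON reply into (chat_text, monologue_text).

    Field names are hypothetical; adapt them to the schema you ask the model for.
    """
    reply = json.loads(raw_reply)
    non_verbal = reply.get("non_verbal", "").strip()
    verbal = reply.get("response", "").strip()
    # Non-verbal cues go first, in Markdown italics, followed by the spoken words.
    chat_text = f"*{non_verbal}* {verbal}" if non_verbal else verbal
    monologue = reply.get("internal_monologue", "").strip()
    return chat_text, monologue


raw = json.dumps({
    "non_verbal": "crosses arms and looks away",
    "response": "I don't see why this is my fault.",
    "internal_monologue": "They are blaming me for the whole team's delay.",
})
chat, monologue = split_reply(raw)
print(chat)       # goes to the main chat box
print(monologue)  # goes to the monologue window
```

Keeping the cues and the monologue in separate fields means the UI decides where each piece is shown, instead of hoping the model formats its text consistently.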

Now let’s look at the right side:

This panel shows the default settings I left to help you get started. For the best experience, be as specific as possible here. Don’t hesitate to try different variations!

Extra tip: if you want the character to speak in a specific voice, provide examples of the phrases and tone you expect.
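As an illustration of that tip, here is a hypothetical sketch of turning persona settings, including example phrases, into a system prompt. The `Persona` fields and the prompt wording are assumptions for illustration, not the simulator’s actual settings or prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    # Hypothetical settings fields, not the simulator's real ones.
    name: str
    role: str
    situation: str
    temperament: str
    example_phrases: list[str] = field(default_factory=list)


def build_system_prompt(p: Persona) -> str:
    """Render persona settings into a system prompt for the chat model."""
    lines = [
        f"You are {p.name}, {p.role}.",
        f"Situation: {p.situation}",
        f"Temperament: {p.temperament}",
    ]
    if p.example_phrases:
        # Concrete examples of voice and tone for the model to imitate.
        lines.append("Speak in the same voice and tone as these examples:")
        lines.extend(f'- "{phrase}"' for phrase in p.example_phrases)
    lines.append("Stay in character for the whole conversation.")
    return "\n".join(lines)


alex = Persona(
    name="Alex",
    role="a senior engineer on your team",
    situation="You are about to receive a below-expectations annual review.",
    temperament="defensive, sarcastic when cornered",
    example_phrases=[
        "Honestly, I expected more from this company.",
        "Sure, blame the engineer. Again.",
    ],
)
print(build_system_prompt(alex))
```

The example phrases only appear when you provide some, so a bare persona still produces a valid prompt.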

Disclaimer

This is a demo, so use it at your own risk and for your own purposes. It might break unexpectedly, and I take no responsibility whatsoever for anything. I know it can probably be jailbroken into saying weird stuff, but I don’t care about that since all sessions are private.

I’m also paying for the GPT usage myself, so if this becomes too popular I might hit the spending limits I’ve set as a safeguard.

Finally, I would appreciate some feedback in the comments; if you try it out, I’d like to hear about your experience.

Some technical details

Update Sep-07-23

I have published a Python package to deal with broken JSON coming from LLMs. Give it a try here: https://pypi.org/project/json-repair/
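If adding a dependency isn’t an option, a much cruder stdlib-only fallback covers two common failure modes: the object wrapped in a Markdown code fence, or surrounded by chatty prose. This is a hypothetical sketch, not how json-repair works; the package handles many more cases (such as broken quoting) that this does not.

```python
import json
import re
from typing import Any

# Matches a Markdown code fence, optionally tagged "json", and captures its body.
FENCE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL)


def parse_llm_json(text: str) -> Any:
    """Parse model output that should be JSON but may be wrapped in extra text.

    Crude fallback: it does NOT repair broken JSON (missing quotes, trailing
    commas, truncation); it only strips fences and surrounding prose.
    """
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        pass
    fenced = FENCE.search(text)
    if fenced:
        text = fenced.group(1)
    # Keep everything from the first "{" to the last "}".
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start : end + 1])


print(parse_llm_json('Sure! Here it is:\n```json\n{"response": "Fine."}\n```'))
```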

The modern stack for building proofs of concept like this has evolved a lot in recent months, and I was impressed by how easy it is to build something like this once you get the prompt engineering right.

Tech stack:

Language: Python 3.11

UI: Gradio

LLM: LangChain to abstract the integration with OpenAI GPT-3.5

Monitoring: Mona Labs

Hosted on HuggingFace (obviously)

The code is public, so you can take a look at it yourself. A few things surprised me:

Getting GPT-3.5 to respond consistently is very tricky. In particular:

If you need a specific kind of structured output (here, a JSON object with specific fields), send GPT as much JSON as possible. The human’s messages in particular should be JSON, because GPT tends to reply in the same format (and language) it receives.

GPT-3.5 struggles a bit to stay in character. Sending the system message with every request helps keep it in character by reinforcing the instructions.

GPT is also very agreeable, so if you need a very disagreeable character, you have to push it.
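These three points can be sketched with plain OpenAI-style `role`/`content` message dicts (the simulator itself goes through LangChain, which this sketch does not use). The schema keys, the prompt wording, and the extra reminder placed just before the newest turn are assumptions for illustration; the idea is that the instructions travel with every request, the human turn is itself JSON, and the prompt pushes back against the model’s agreeableness explicitly.

```python
import json

# Hypothetical reply schema the model is asked to fill in.
REPLY_SCHEMA = {
    "non_verbal": "body language and tone of voice",
    "response": "what the character says out loud",
    "internal_monologue": "what the character really thinks",
}

SYSTEM_PROMPT = (
    "You are role-playing the character described in the settings. "
    "Do not agree just to please the user; push back whenever the character would. "
    "Always reply with a single JSON object with exactly these keys: "
    + json.dumps(REPLY_SCHEMA)
)

# Assumed short reminder placed right before the newest user turn.
REMINDER = "Reminder: stay in character and reply only with the JSON object."


def build_messages(history: list[dict], user_text: str) -> list[dict]:
    """Build the full message list for one request.

    The system prompt (and the reminder) are included on every call, and the
    user's turn is itself JSON so the model is nudged to answer in JSON too.
    """
    user_turn = {"role": "user", "content": json.dumps({"message": user_text})}
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *history,
        {"role": "system", "content": REMINDER},
        user_turn,
    ]


messages = build_messages([], "I'd like to talk about your last project.")
for m in messages:
    print(m["role"], "->", m["content"][:60])
```

Because the chat API is stateless, this list is rebuilt for each call, so the instructions are present no matter how long the conversation gets.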

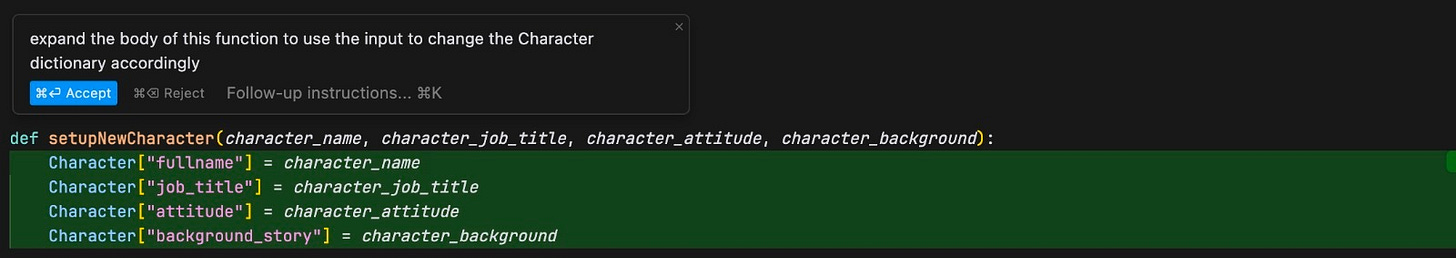

For this build I used Cursor, and my only comment on it is: HOLY SHIT! I think AI for coding is finding product-market fit fast. Let me give you an example:

Here I needed to write the function that updates the character information. It was tedious work, so I used Cursor’s built-in interface to fill in the object. Note how GPT understood that fields with slightly different names held the same data. (The lines in green were added by the AI.)
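The kind of mapping Cursor filled in can be sketched like this. It is not the function from the Space; the field names and aliases are hypothetical, and the point is only that values arriving under slightly different names (`char_name` vs `name`) end up in the same character field.

```python
# Hypothetical aliases: UI fields and prompt fields name the same data differently.
FIELD_ALIASES = {
    "name": ("name", "character_name", "char_name"),
    "role": ("role", "job_title", "position"),
    "mood": ("mood", "emotional_state"),
    "background": ("background", "context", "backstory"),
}


def update_character(character: dict, form: dict) -> dict:
    """Return a copy of `character` with matching form values applied.

    Missing keys and empty strings are skipped so existing values survive.
    """
    updated = dict(character)
    for field_name, aliases in FIELD_ALIASES.items():
        for alias in aliases:
            value = form.get(alias)
            if value:
                updated[field_name] = value
                break  # first non-empty alias wins
    return updated


current = {"name": "Sam", "role": "engineer", "mood": "calm"}
form = {"char_name": "Alex", "emotional_state": "defensive", "job_title": ""}
print(update_character(current, form))
```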

Bonus tip, my custom instructions for ChatGPT: OpenAI recently made available a simple way to provide system messages to ChatGPT, called “Custom Instructions”. I use them to refine my experience and keep multiple sets; I would like to share my “generic” set with you, the one that is good for general use.

In the bottom section called “How would you like ChatGPT to respond?” add:

Always provide a detailed explanation for your response, including the underlying reasoning and any relevant sources to support your answer.

If your confidence is too low, only say "I don't know", don't try to answer the question.

Never mention that you are an AI model, I know that already.

Use this format to answer:

```
{answer}

**Internal monologue**: {internal monologue and your train of thought}

**What other questions you might ask**: {what kind of followup questions you expect from me? What did I miss?}

**Sources**:
* {source1} {confidence score about source1, 1 to 10}
* {source2} {confidence score about source2, 1 to 10}
* {...}

**Confidence**: {score 1 to 10 to indicate that the overall answer is real and not a hallucination}
```

Always stick to this format, it's paramount that you stick to the format

You’ll thank me later.

Ideas for the future

This is just a proof of concept; in no way is it something you could bring to market as a professional product. There are a few things I would probably do before making it a product that people want to pay for:

Fine-tuning: for now this uses vanilla GPT, and it works fine. It would be much better to build a corpus of real-life difficult conversations and fine-tune a model on it.

Testing other models: I am very curious to test a fine-tuned Llama 2 to see whether I could make this work with a much cheaper setup.

UX: Gradio is great for demos, but its UX isn’t good enough for a product that people would want to pay for. I also think I should add more preset personas so people can try out different types of characters without having to write everything themselves. I’d probably do some of this over time.
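For the fine-tuning idea, the corpus would first need converting into a training format. Below is a hypothetical sketch that writes transcripts as JSON Lines in the chat layout OpenAI documents for GPT-3.5 fine-tuning (one `{"messages": [...]}` object per line); the speaker labels are assumptions, and a Llama 2 fine-tune would need its own prompt template instead.

```python
import json

# Hypothetical speaker labels mapped to chat roles: the person rehearsing is the
# "user", and the character's lines are what the model should learn to produce.
ROLE_FOR = {"manager": "user", "character": "assistant"}


def to_finetune_jsonl(transcripts: list[list[tuple[str, str]]], system_prompt: str) -> str:
    """Convert transcripts of (speaker, text) turns into chat-format JSON Lines."""
    lines = []
    for turns in transcripts:
        messages = [{"role": "system", "content": system_prompt}]
        messages += [{"role": ROLE_FOR[speaker], "content": text} for speaker, text in turns]
        lines.append(json.dumps({"messages": messages}))
    return "\n".join(lines)


corpus = [[
    ("manager", "Your rating this year is 'below expectations'."),
    ("character", "What? Nobody told me anything was wrong."),
]]
print(to_finetune_jsonl(corpus, "You are a defensive employee receiving a review."))
```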

If you have ideas and would like to contribute, this is a public Space with pull requests open, so feel free to push some.
